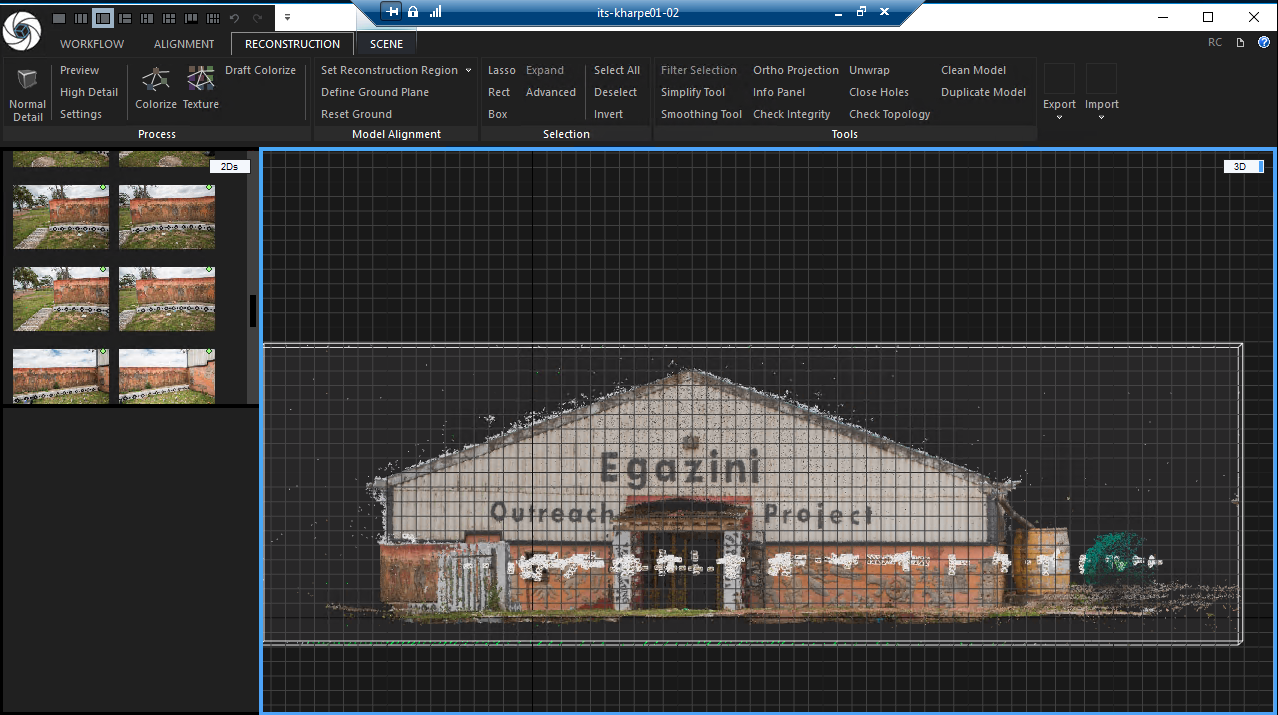

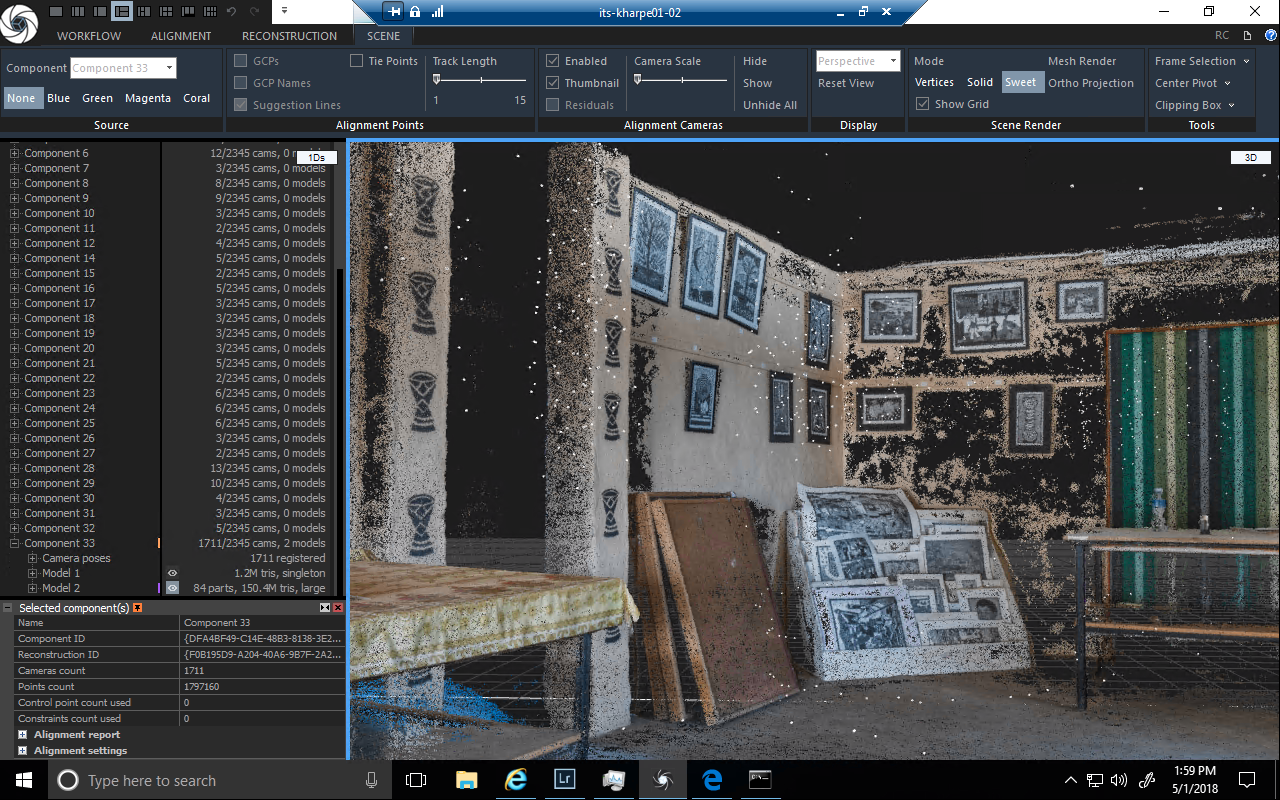

What is a Graphics Processing Unit (GPU) cluster, and why was it developed?

SUrge was developed to help researchers with computational tasks that benefit substantially from the speed-up GPUs can provide. We developed the cloud methodology to make it simple to allocate the GPUs across a number of different workloads.

Access to GPUs will enhance research opportunities for Syracuse students. Undergraduate and graduate students will gain practical experience with cutting-edge computing architectures.

NSF award enhances Syracuse University computational capabilities through GPU cluster