Naylor’s case study, Gaussian Process Regression for FX Forecasting, which is available on GitHub, demonstrates how quantitative analysis can be used on the buy-side to produce a new forecasting model.

Charles, can you tell us a bit more about yourself?

I’m a student in the new online Applied Data Science Masters program at Syracuse. I earned my undergraduate degree in Economics from Columbia back in 2003. I’ve spent the last 10 years working in NYC as a quantitative analyst for a Global Macro hedge fund (our main funds were called L-Plus and GDAA, but they were never marketed in America) owned by Nikko Asset Management, a Japanese asset manager. In my capacity as an analyst and trader at this fund, I worked to improve our forecasting algorithm for Foreign Exchange (FX) and sovereign bond derivatives. We ran into some difficulties in the market in the last few years, and our parent company dissolved the management team.

How did you become interested in analysis of FX forecasts?

So, I’ve taken a professional interest in FX forecasting for a number of years. In my old job, however, I was constrained to follow the basic algorithm (essentially a set of enhancements to the Kalman Filter) that had been established long before I was hired. Since that algorithm was developed, the tools available to a statistician have become vastly more powerful, in both raw processing power and the levels of abstraction available. Stan in particular was a revelation for me, as I’m not great at calculus, and it permits the developer to specify arbitrary prior distributions for variables without needing to calculate any derivatives. I’m primarily interested in the application of new forecasting techniques to FX.

Tell us, how are FX forecasts used and by whom?

FX forecasts would be used by anyone with exposure to foreign currencies. So, for example, companies with overseas sales can lose money if their home currency strengthens before they repatriate earnings. They will want to hedge that risk using derivatives. On the other side, speculators will deliberately take exposure to currency movements to earn a profit. Before interest rates hit the floor, the carry trade was popular, in which an investor borrows money in a currency with a low interest rate, like Japanese Yen, then lends it out in a currency with a higher interest rate, like Australian Dollars. That investor pockets the difference in rates, but could lose money if the Yen strengthens against the Australian Dollar in the interim.
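The carry trade arithmetic described above can be sketched in a few lines of Python. This is a minimal illustration, not anything taken from the case study: the function name `carry_trade_return`, the interest rates, and the exchange rates are all hypothetical, and the sketch ignores transaction costs and compounding conventions.

```python
def carry_trade_return(funding_rate, target_rate, spot_start, spot_end):
    """Profit per unit of funding currency borrowed, over one period.

    Spot rates are quoted as funding-currency units per one unit of the
    target currency (for example, JPY per AUD). All inputs are
    illustrative assumptions, not market data.
    """
    # Convert one unit of borrowed funding currency into the target currency.
    target_units = 1.0 / spot_start
    # Lend it out at the higher target-currency interest rate.
    target_proceeds = target_units * (1.0 + target_rate)
    # Convert the proceeds back at the end-of-period exchange rate.
    funding_proceeds = target_proceeds * spot_end
    # Repay the loan with interest; what remains is profit or loss.
    return funding_proceeds - (1.0 + funding_rate)


# Hypothetical rates: borrow at 0.1%, lend at 4%.
flat = carry_trade_return(0.001, 0.04, spot_start=80.0, spot_end=80.0)
yen_up = carry_trade_return(0.001, 0.04, spot_start=80.0, spot_end=72.0)

print(f"Exchange rate unchanged: {flat:+.3f}")
print(f"Yen strengthens 10%:     {yen_up:+.3f}")
```

With the exchange rate unchanged, the investor keeps roughly the interest rate differential. When the funding currency strengthens, the currency loss can easily exceed that differential, which is why the forecast of the exchange rate matters so much to the trade.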

Ultimately, what are the goals of your analysis and the resulting forecasting models?

Primarily, I wanted to evangelize generative modeling. I believe there is a lot of misplaced hope in Finance that recent innovations in machine learning will solve some of the challenges of forecasting asset returns. Those techniques tend to focus on adapting classification models to a continuous space. The results are difficult to interpret, and hence it’s difficult to quantify what’s wrong with them. For reasons I go into in the analysis, primarily to do with the indeterminacy of complex adaptive systems, it’s unlikely that anyone can produce an asset return forecast that’s both accurate and precise. Generative models encourage analysts to look at the whole posterior distribution rather than a point estimate, and consequently to consider the vast number of ways things could have played out differently in the markets.

That said, I also believe we are, slowly, returning to the set of market conditions in which these types of forecasting models are likely to be successful in FX. Long-term interest rates have fallen for thirty years, and I think we are finally past rock bottom.
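To make the earlier point about posterior distributions concrete, here is a small sketch contrasting a point estimate with the full set of draws. The draws are simulated from a normal distribution with made-up parameters purely for illustration; in a real analysis they would come from a fitted generative model, and nothing here reproduces the model in the case study.

```python
import random
import statistics

random.seed(0)

# Hypothetical stand-in for posterior predictive draws of a weekly FX
# return: mean 0.2%, standard deviation 1.5%. These numbers are assumed.
draws = [random.gauss(0.002, 0.015) for _ in range(10_000)]

# A point estimate collapses everything into one number.
point_estimate = statistics.mean(draws)

# The distribution shows how wide the range of plausible outcomes is.
cut_points = statistics.quantiles(draws, n=20)  # 5%, 10%, ..., 95%
lower_5, upper_95 = cut_points[0], cut_points[-1]
prob_loss = sum(d < 0 for d in draws) / len(draws)

print(f"Point estimate:       {point_estimate:+.4f}")
print(f"90% interval:         [{lower_5:+.4f}, {upper_95:+.4f}]")
print(f"Probability of loss:  {prob_loss:.2f}")
```

A slightly positive point estimate can sit alongside a wide interval and a substantial probability of loss, which is the information a single forecast number hides.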

What is your hope for how this documentation will be used?

I’m hoping first to spark more interest in generative modeling among the Finance community, and second to demonstrate what I believe are best practices for robust, repeatable analysis. I’m also, frankly, looking for work at the moment, and as all my prior analyses have been proprietary, I wanted something free and clear of IP concerns that I could point employers to.

GitHub information and documentation

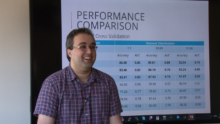

What SU computing resource(s) did you use? Can you compare how this worked vs. your previous analysis methods?

I made use of SU’s OrangeGrid (HTCondor) grid computing network to perform a large backtest of my forecasts with weekly periodicity. In the past, I simply couldn’t perform such a thorough backtest. I used to fit a single complicated model, then backtest subsequent periods using a simplified version that rested on assumptions derived from the single complicated run. I also only ever used monthly periods. Without the resources of Syracuse at my disposal, this analysis simply wouldn’t have been feasible.

What is next in your research?

I am eagerly awaiting some innovations in the Stan programming language that should let me fit something more computationally difficult, so that I could use fat-tailed priors rather than Gaussian distributions for the FX returns. I would also like to apply similar techniques to a more tractable problem in finance, perhaps doing a non-linear GP regression on equity returns, or equity sector returns, for example. These would be more useful for non-professionals, as retail FX trading is a real minefield. I would also like to round off this particular project by demonstrating a series of optimized portfolio weights on the basis of the forecast.

Yahoo Labs has made an initial donation of 120 servers, or about 1,000 cores, that have been installed as part of the University’s Research Computing facilities in Machinery Hall.


Software License / Cost:
